Tracking DORA metrics in 2026

A few years ago, tracking DORA metrics often meant pulling four numbers into a dashboard and calling it done. By 2026, DORA has evolved: the model is sharper, the benchmarks are more nuanced, and the expectations from leadership are higher.

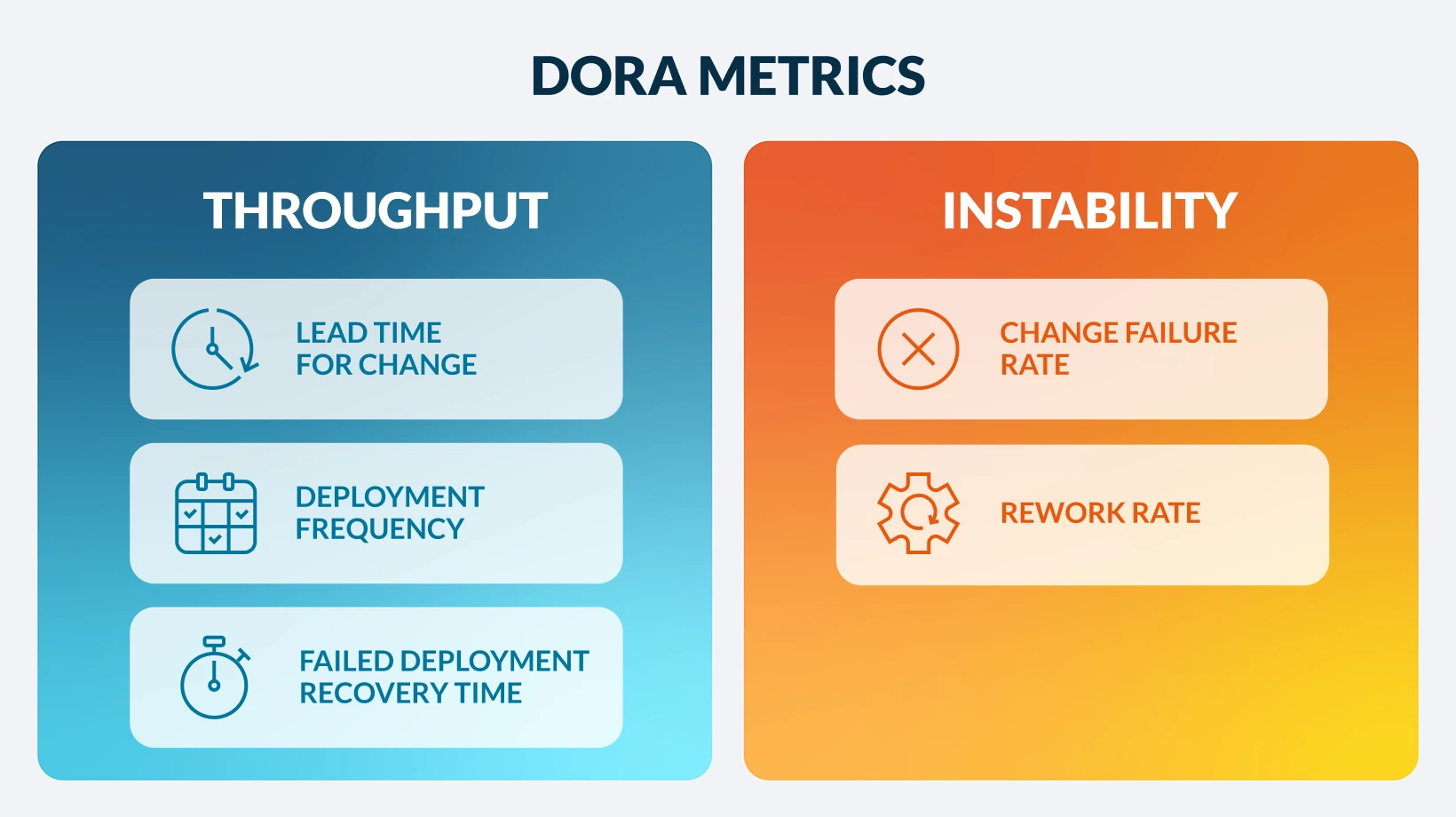

- DORA now centers on five metrics, adding rework rate to capture instability that shows up as unplanned work in production.

- Failed deployment recovery time replaces MTTR, keeping the focus on how quickly teams recover from deployment-related failures that require immediate intervention.

- Benchmarking has moved beyond “elite vs. low”, shifting toward more granular distributions that better reflect how real teams operate.

These changes matter because they make DORA more useful, but also easier to get wrong. A single roll-up number can hide the real story.

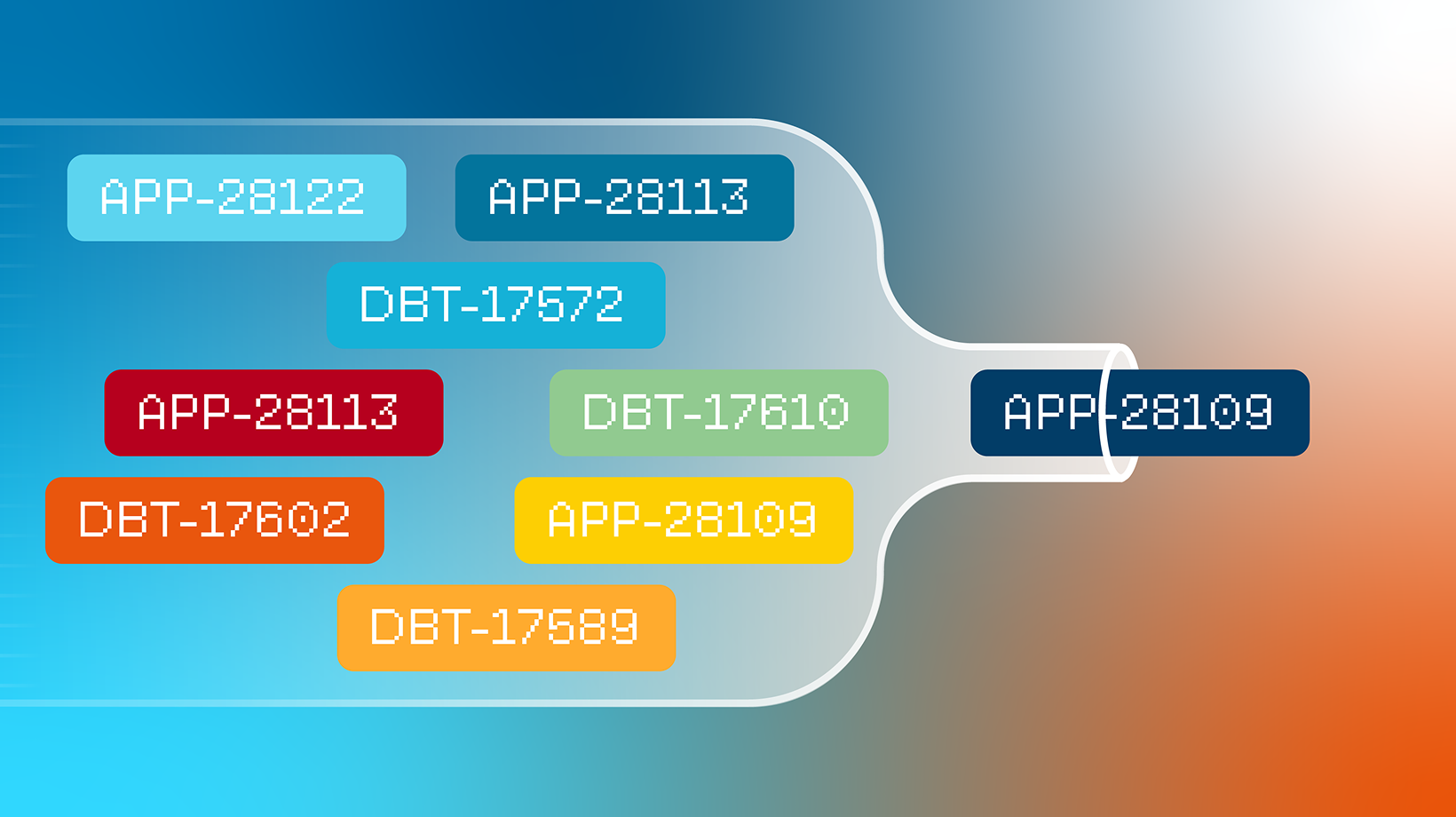

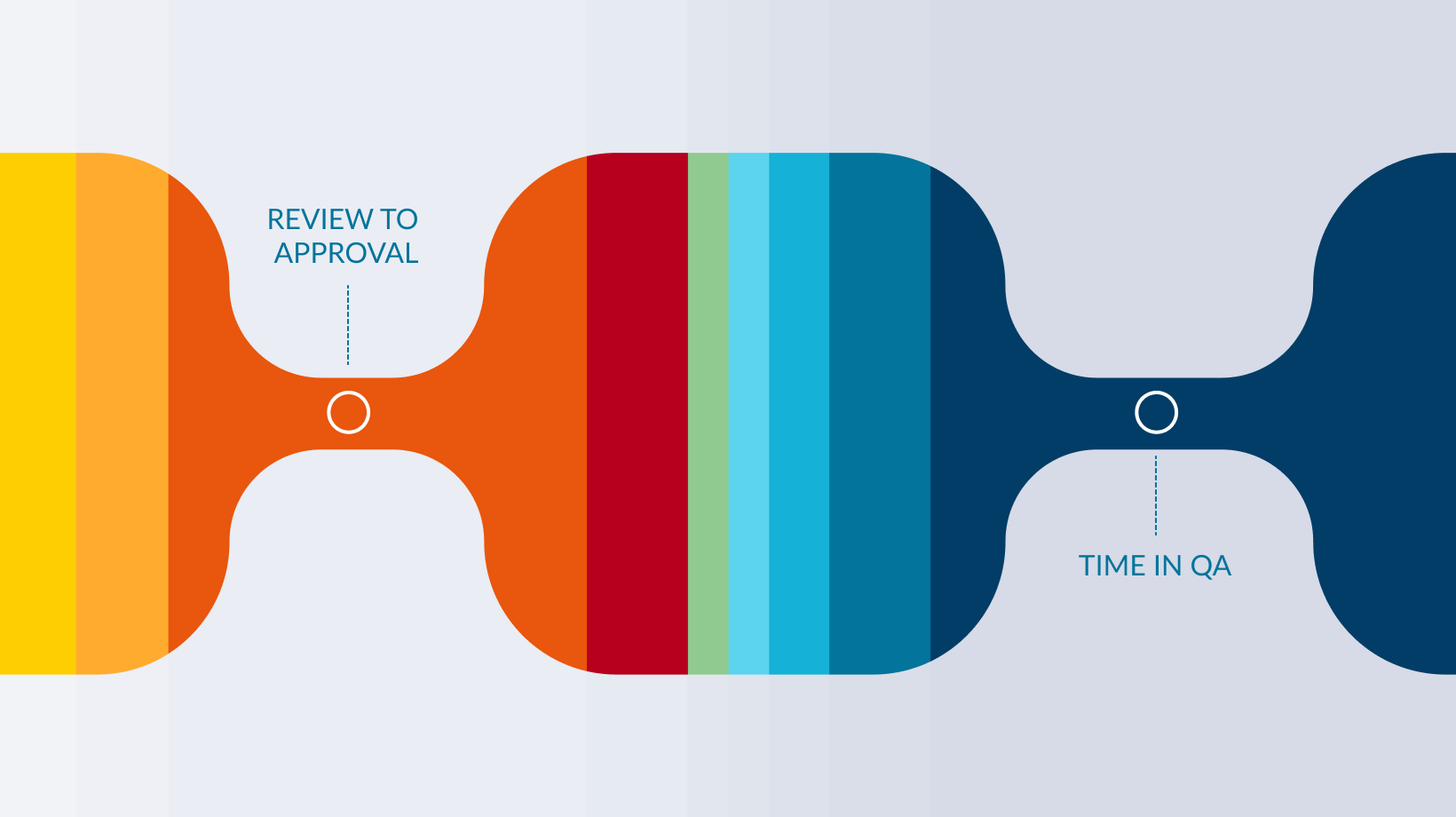

Take this example: Two teams can have the same lead time for change, while one is slowed down in review and the other is slowed down in deployment approvals. If you can’t see the stages, you can’t fix the system.
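To make the two-team example concrete, here is a minimal sketch in Python. The stage names (`coding`, `review`, `deploy_approval`) and the hour values are invented for illustration; a real pipeline would derive them from your own Git, CI/CD, and approval data.

```python
from statistics import median

# Hypothetical per-change stage durations in hours (illustrative data only).
CHANGES = [
    {"team": "A", "coding": 10, "review": 30, "deploy_approval": 4},
    {"team": "A", "coding": 8, "review": 34, "deploy_approval": 2},
    {"team": "B", "coding": 10, "review": 4, "deploy_approval": 30},
    {"team": "B", "coding": 8, "review": 2, "deploy_approval": 34},
]
STAGES = ("coding", "review", "deploy_approval")


def stage_breakdown(changes, team):
    """Return the median lead time, per-stage medians, and slowest stage for a team."""
    rows = [c for c in changes if c["team"] == team]
    per_stage = {s: median(c[s] for c in rows) for s in STAGES}
    lead_time = median(sum(c[s] for s in STAGES) for c in rows)
    slowest = max(per_stage, key=per_stage.get)
    return {"lead_time": lead_time, "stages": per_stage, "slowest": slowest}


team_a = stage_breakdown(CHANGES, "A")
team_b = stage_breakdown(CHANGES, "B")
print(team_a["lead_time"], team_a["slowest"])
print(team_b["lead_time"], team_b["slowest"])
```

Both teams report the same median lead time here, yet the slowest stage differs, which is exactly the detail a single roll-up number hides.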

And if you apply the same thresholds uniformly across every team, disregarding their cadences and operating models, you end up comparing apples to oranges and creating incentives that don’t match the work.

In 2026, engineering leaders also have a new reason to care: DORA has become one of the clearest ways to validate whether big investments are actually improving delivery. That includes platform initiatives, org changes, and increasingly, AI adoption.

If deployment frequency isn’t moving, or failure rates creep upward, leaders need to know whether the problem is tooling, process, adoption, or downstream bottlenecks. You only get those answers when the measurement is connected across the full software delivery life cycle (SDLC) and trusted by the teams doing the work.

That’s why the “right” DORA metrics tool in 2026 is not just a dashboard. It’s a system that can pull signals from everywhere engineering work happens, attribute them correctly, and turn developer productivity data into insights leaders can act on.

In this guide, you’ll learn:

- Why software engineering intelligence platforms are the best tools for tracking DORA metrics

- A detailed breakdown of the top DORA metrics tools in 2026

- What matters most when evaluating DORA metrics tools for enterprise teams

Why software engineering intelligence platforms are the best tools for tracking DORA metrics

DORA metrics are only as trustworthy as your underlying data, and in real enterprises that data is spread across Git, Jira/Azure DevOps, CI/CD, incidents, releases, and org structure. If your org manually pulls data from these sources to calculate DORA metrics each month, the exercise quickly becomes a reporting burden rather than a decision-making tool.

Software engineering intelligence platforms (SEIPs), by contrast, are the best tools to track DORA metrics because they integrate across your toolchain, attribute metrics to actual teams rather than repositories, and surface the root causes behind metric changes.

For enterprise organizations with monorepos, custom workflows, and hundreds of engineers, these platforms handle complexity that basic tools can't.

Note: Gartner has renamed the software engineering intelligence platforms category (SEIP) to developer productivity insights platforms (DPIP).

Top DORA metrics tools in 2026

The software engineering intelligence category has matured significantly, and measuring DORA metrics is a key capability these platforms offer. Here are the platforms engineering leaders are evaluating, starting with the best DORA metrics tool for enterprise complexity.

| DORA Metrics Tool | Best Option For |
| --- | --- |
| Faros AI | Large enterprises with thousands of engineers and hundreds of teams. Ideal for auditable, customizable, stage-level DORA measurement across many teams and toolchains, plus proactive guidance that helps improve delivery performance at scale. |
| LinearB | Companies with ~100 engineers that want visibility into PR flow, reviews, and delivery performance with a relatively fast time-to-value. |
| Jellyfish | Organizations driven by a central technical program management (TPM) team that consider Jira the single source of truth for engineering data. |
| DX | Developer experience leaders who want developer sentiment and experience signals to guide interventions. |
| Plandek | Jira-heavy, process-oriented organizations wanting deeper diagnostic capabilities beyond top-level DORA metrics. |
| Swarmia | Small to mid-size teams that want DORA as an improvement loop (working agreements, team-level focus). |
| DevDynamics | Smaller orgs or teams that want a simpler DORA rollout and manager-friendly views. |
| Appfire Flow | Distributed teams that want a packaged analytics experience for collaboration and delivery health. |
| Waydev | Small to mid-sized organizations that want a broad metrics dashboard across tools (DORA and productivity signals) without heavy customization demands. |

Summary of the best DORA metrics tools and which teams they are best for

1. Faros AI: The best DORA metrics tool for large enterprises

For enterprise teams evaluating DORA metrics tools in 2026, the challenge is getting accurate, explainable DORA metrics that reflect how your organization actually ships software across multiple toolchains, custom deployment paths, shared services, and teams with different operating models.

Faros AI is built for that reality, delivering trusted DORA metrics dashboards and cross-org scorecards with drill-downs by org structure, team, and application/service so leaders can benchmark performance and act quickly.

Faros AI also aligns with where DORA is headed, including tracking the fifth DORA metric (rework rate) so enterprises can measure instability alongside throughput and pinpoint where reliability work is creating unplanned delivery load.

What high-performing enterprise teams look for in a DORA metrics tool, and how Faros AI delivers

Stage-by-stage breakdowns, not just aggregates: Within a large enterprise, teams need to see where time actually goes across the lifecycle, not a single blended lead time number. Faros AI provides stage-level visibility across task cycles, PR cycles, and deployment cycles, with drill-downs by team and application so bottlenecks become obvious and actionable.

Team-specific thresholds and benchmarks: A deployment frequency target that makes sense for a customer-facing product may be unrealistic for internal tooling or regulated systems. Faros AI supports custom benchmarks by team context. This helps leaders avoid misleading comparisons and set appropriate performance targets.
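As a rough illustration of team-specific thresholds in general (not of how Faros AI implements them), the sketch below evaluates each team against its own targets and falls back to a default. The team names, the `Targets` fields, and every number are hypothetical and are not DORA benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Targets:
    min_deploys_per_week: float
    max_change_fail_rate: float


# Hypothetical targets; tune these to each team's cadence and operating model.
DEFAULT_TARGETS = Targets(min_deploys_per_week=5, max_change_fail_rate=0.15)
TEAM_TARGETS = {
    "regulated-payments": Targets(min_deploys_per_week=0.25, max_change_fail_rate=0.05),
}


def breached_metrics(team, deploys_per_week, change_fail_rate):
    """Return the names of metrics that miss the team's own targets."""
    targets = TEAM_TARGETS.get(team, DEFAULT_TARGETS)
    breaches = []
    if deploys_per_week < targets.min_deploys_per_week:
        breaches.append("deployment_frequency")
    if change_fail_rate > targets.max_change_fail_rate:
        breaches.append("change_fail_rate")
    return breaches


# The same raw numbers pass for one team and breach for another.
print(breached_metrics("regulated-payments", 1, 0.02))
print(breached_metrics("storefront", 1, 0.02))
```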

Proactive intelligence, not passive dashboards: In 2026, engineering leaders want to know what changed, why it changed, and what to do next without waiting for quarterly reviews. Faros provides AI-generated summaries, trend alerts, Slack and Teams notifications for breached thresholds, and recommended interventions so you can catch emerging delivery risk early and respond faster.

Unlimited historical data for real trend analysis: Enterprises often need to understand the impact of reorganizations, platform migrations, and new ways of working over longer horizons. Faros offers unlimited history for DORA metrics, enabling longitudinal analysis and before/after measurement that many tools cannot support when history is capped.

Full SDLC integration across cloud, on-prem, and custom tools: The most common reason enterprise DORA programs stall is incomplete visibility. Faros is designed to connect broadly and capture the full lifecycle of a code change, including complex environments with on-prem systems and custom-built tooling, so DORA metrics reflect reality.

AI investment and impact insights: An increasing number of enterprises are using DORA metrics to evaluate whether AI is improving delivery outcomes or simply increasing upstream output while shifting bottlenecks downstream. Faros AI’s combination of DORA tracking and AI impact analysis helps leaders diagnose whether changes in deployment frequency, lead time, and failure rates are caused by AI tooling, process inefficiencies, low adoption, review constraints, or release gates.

Best for: Large enterprises with thousands of engineers and hundreds of teams. Ideal for auditable, customizable, stage-level DORA measurement across many teams and toolchains, plus proactive guidance that helps improve delivery performance at scale.

2. LinearB: PR-centric workflow visibility with DORA tracking

LinearB is an engineering metrics platform that focuses heavily on how work moves through pull requests and reviews, which makes it strong for diagnosing flow bottlenecks that affect DORA outcomes. It connects to GitHub and Jira and helps teams see where lead time expands—often in review, handoffs, and batch size.

Good option for: Companies with ~100 engineers that want visibility into PR flow, reviews, and delivery performance with a relatively fast time-to-value.

3. Jellyfish: Business-aligned engineering intelligence

Jellyfish positions itself as an engineering management platform with strong executive reporting across delivery and engineering investment. It supports DORA-aligned measurement based on Jira data, while also tying engineering work to initiatives, planning, and organizational priorities.

Good option for: Organizations driven by a central technical program management (TPM) team that consider Jira the single source of truth for engineering data.

4. DX: Developer experience meets DORA metrics

DX combines DORA metrics with its Core 4 framework (speed, effectiveness, quality, and business impact), treating developer surveys as a primary productivity signal. DX generally prefers self-reported metrics for cross-team benchmarking.

Good option for: Developer experience leaders who want developer sentiment and experience signals to guide interventions.

5. Plandek: DORA with second-order metrics

Plandek tracks DORA alongside 50+ second-order metrics like cycle time, PR collaboration, and flow efficiency to explain the "why" behind metric changes. The platform offers analytics and workflow automation to help teams anticipate problems before they impact delivery.

Good option for: Jira-heavy, process-oriented organizations wanting deeper diagnostic capabilities beyond top-level DORA metrics.

6. Swarmia: Team-focused DORA and SPACE metrics

Swarmia emphasizes team autonomy with DORA and SPACE metrics tracking, working agreements via Slack notifications, and user-friendly setup. It’s designed to help teams inspect trends, drill into drivers, and form habits around continuous improvement rather than just publishing dashboards.

Good option for: Small to mid-size teams that want DORA as an improvement loop (working agreements, team-level focus).

7. DevDynamics: Analytics platform with quick DORA setup

DevDynamics offers DORA metrics dashboards aimed at giving engineering managers quick visibility into delivery performance trends. It’s positioned as a simpler way to implement DORA reporting without the heavier enterprise modeling and customization some larger SEIP tools focus on.

Good option for: Smaller orgs or teams that want a simpler DORA rollout and manager-friendly views.

8. Appfire Flow: Code-centric analytics with DORA measurement

Appfire Flow supports DORA metrics tracking and positions it as a way to align delivery performance across distributed teams and leadership layers. It combines engineering analytics with workflow reporting intended to surface where collaboration and coordination slow delivery down.

Good option for: Distributed teams that want a packaged analytics experience for collaboration and delivery health.

9. Waydev: Engineering intelligence with DORA

Waydev offers DORA metrics dashboards as part of a broader engineering intelligence and productivity insights product. It’s positioned for leaders who want to start tracking DORA metrics quickly, with a centralized metrics view across tools (Git, issues, deployments) and a relatively light setup.

Good option for: Small to mid-sized organizations that want a broad metrics dashboard across tools (DORA and productivity signals) without heavy customization demands.

What should engineering leaders look for when evaluating DORA metrics tools?

Choosing the wrong platform means generating metrics that look authoritative but lead you astray. Here's what actually matters when making this decision:

Integration depth across the SDLC: A DORA-enabled engineering intelligence tool should connect to task management, source control, CI/CD, and incident management, as well as to any homegrown systems you run. Be cautious with tools that rely exclusively on Jira and GitHub to calculate these metrics. Ask directly about custom deployment processes and non-standard workflows.

All five DORA metrics with correct attribution: Confirm the DORA metrics platform tracks all five DORA metrics. Then, verify it can attribute these metrics to the right teams and applications, even in monorepos with complex ownership.
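One simple way to picture attribution in a monorepo is a longest-prefix match from changed file paths to owning teams. The sketch below assumes a hand-maintained ownership map; the paths, team names, and the `unowned` fallback are hypothetical, and real platforms may resolve ownership quite differently.

```python
# Hypothetical ownership map: path prefix -> owning team.
OWNERS = {
    "services/checkout/": "payments",
    "services/": "platform",
    "web/": "storefront",
}


def owning_teams(changed_paths, owners=OWNERS):
    """Attribute a change to teams using the most specific matching path prefix."""
    teams = set()
    for path in changed_paths:
        matches = [prefix for prefix in owners if path.startswith(prefix)]
        if matches:
            # The longest prefix wins, so services/checkout/ beats services/.
            teams.add(owners[max(matches, key=len)])
        else:
            teams.add("unowned")
    return teams


print(owning_teams(["services/checkout/api.py", "web/app.ts"]))
print(owning_teams(["services/search/index.py"]))
```

Tracking an explicit `unowned` bucket is a deliberate choice here: it makes gaps in the ownership map visible instead of silently dropping those changes from every team's metrics.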

Customization for how your teams work: Standard definitions do not always fit. You may define “deployment” differently or need different thresholds by team context. Look for a DORA metrics tool with the ability to tailor metrics and benchmarks, not one-size-fits-all settings.

Actionable insights, not just dashboards: Dashboards alone do not drive change. Look for DORA metrics tools that also surface bottlenecks, recommend interventions, and alert you when metrics shift. Tools with LLM-based AI summaries can help leaders spot trends faster.

Benchmarking against current distributions: DORA has moved beyond broad tiers to granular distributions. A good DORA metrics tool should show where you sit on each metric and help you track progress against realistic, data-backed targets.

Enterprise readiness: For large and complex orgs, your DORA metrics tool must scale to large data volumes without slowing down. Security certifications like SOC 2 and ISO 27001 also matter, and SaaS, hybrid, or on-prem options may be required for compliance.

The best tool for tracking DORA metrics in 2026 depends on complexity and scale

DORA metrics in 2026 look different than they did even two years ago. Five metrics instead of four. Granular distributions instead of simple tiers. And a growing recognition that AI tools create new measurement challenges alongside new capabilities.

For engineering leaders evaluating DORA metrics tools, the decision comes down to how large, diversified, and customized your stack is. If you’re an enterprise org with monorepos, custom deployment paths, or multiple CI systems, and you have a serious need for governance and accuracy, Faros AI is best positioned for that environment. For smaller teams with simpler stacks and workflows, another option may be more suitable.

The key is matching the tool to your actual constraints. Don’t buy enterprise capabilities you won’t use, but don’t assume a lightweight solution will scale as your engineering organization grows.

Ready to see how enterprise-grade DORA measurement works in practice? Explore Faros AI's DORA metrics solution to understand how the leading engineering intelligence solution helps large engineering organizations turn delivery data into strategic advantage.

FAQ: DORA metrics tools and DORA tracking in 2026

1) What are the 5 DORA metrics in 2026?

DORA’s software delivery performance metrics are change lead time, deployment frequency, failed deployment recovery time, change fail rate, and deployment rework rate.
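For readers who want to see how the five numbers relate, here is a simplified sketch that computes them from a list of deployment records. The record fields (`committed_at`, `deployed_at`, `failed`, `recovered_at`, `unplanned`) and the sample data are assumptions made for illustration, and the definitions are deliberately reduced; your organization's definitions may differ.

```python
from datetime import datetime, timedelta
from statistics import median


def hours(delta):
    return delta.total_seconds() / 3600


def dora_summary(deployments, period_days):
    """Compute simplified versions of the five metrics for one service."""
    n = len(deployments)
    if n == 0:
        raise ValueError("no deployments in the period")
    failed = [d for d in deployments if d["failed"]]
    recovery = [hours(d["recovered_at"] - d["deployed_at"]) for d in failed]
    return {
        "deployment_frequency_per_day": n / period_days,
        "change_lead_time_hours": median(
            hours(d["deployed_at"] - d["committed_at"]) for d in deployments
        ),
        "change_fail_rate": len(failed) / n,
        "failed_deployment_recovery_time_hours": median(recovery) if recovery else None,
        # Assumption: rework = share of deployments flagged as unplanned fixes.
        "deployment_rework_rate": sum(1 for d in deployments if d["unplanned"]) / n,
    }


t0 = datetime(2026, 1, 5, 9, 0)  # illustrative timestamps


def record(lead_h, failed=False, recover_h=None, unplanned=False):
    deployed = t0 + timedelta(hours=lead_h)
    recovered = deployed + timedelta(hours=recover_h) if failed else None
    return {"committed_at": t0, "deployed_at": deployed, "failed": failed,
            "recovered_at": recovered, "unplanned": unplanned}


SAMPLE = [record(24), record(12, failed=True, recover_h=2),
          record(2, unplanned=True), record(10)]
summary = dora_summary(SAMPLE, period_days=2)
print(summary)
```

Medians are used rather than means so that a single very slow change or a long outage does not dominate the figure.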

2) What changed in DORA benchmarking recently?

The DORA Report 2025 introduced benchmarks for all five metrics and shifted from broad performance tiers to per-metric buckets, plus seven team archetypes.

3) Why did DORA add “rework rate” (deployment rework rate)?

DORA added rework rate to better capture instability via unplanned deployments caused by production incidents—especially important as AI and higher throughput can increase downstream quality risks.

4) Are DORA metrics a measure of developer productivity?

Not exactly; DORA is designed to measure software delivery performance. Most organizations pair DORA with broader frameworks and additional metrics to avoid misinterpretation.

5) What’s the difference between “failed deployment recovery time” and MTTR?

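MTTR was often applied to any incident, whatever its cause. DORA now frames recovery as failed deployment recovery time: the time it takes to recover from a failed deployment that requires immediate intervention. The scope is narrower, limited to failures that deployments introduce.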

6) What makes DORA metrics hard to measure accurately?

The hard part is data integrity and attribution across systems (Git, tickets, CI/CD, incidents) and across org structure. That’s why SEIPs focus on ingesting, normalizing, and mapping signals from multiple tools rather than relying on a single source.

7) Which teams benefit most from DORA metrics tools?

Any team improving delivery performance can benefit, but DORA is especially useful where you’re standardizing delivery practices and benchmarking across services/apps—so long as you interpret metrics in context.

8) What should I look for when choosing a DORA metrics tool in 2026?

Look for: strong integrations (not reliance on Jira alone), correct attribution (team/app/service), benchmarking, and enough context to explain “why” (not just “what”).

9) How does AI adoption change how you interpret DORA metrics?

DORA 2025 emphasizes AI as an “amplifier” and highlights that productivity gains can be lost downstream without end-to-end visibility. Watch instability metrics closely as throughput increases.

10) Can I measure DORA metrics per service/application, not just per team?

Yes—and DORA explicitly notes these metrics are best suited to measuring an application/service at a time and warns against misleading blended comparisons.