Lab vs. Reality: What METR's Study Can’t Tell You About AI Productivity in the Wild

Author: Naomi Lurie | Date: July 28, 2025 | Reading Time: 5 min

METR's study found AI tooling slowed developers down. Faros AI's real-world telemetry reveals a more nuanced story: Developers are completing more tasks with AI, but organizations aren't delivering software any faster. This article explores why, and what it means for engineering leaders.

The AI Productivity Debate Gets Complicated

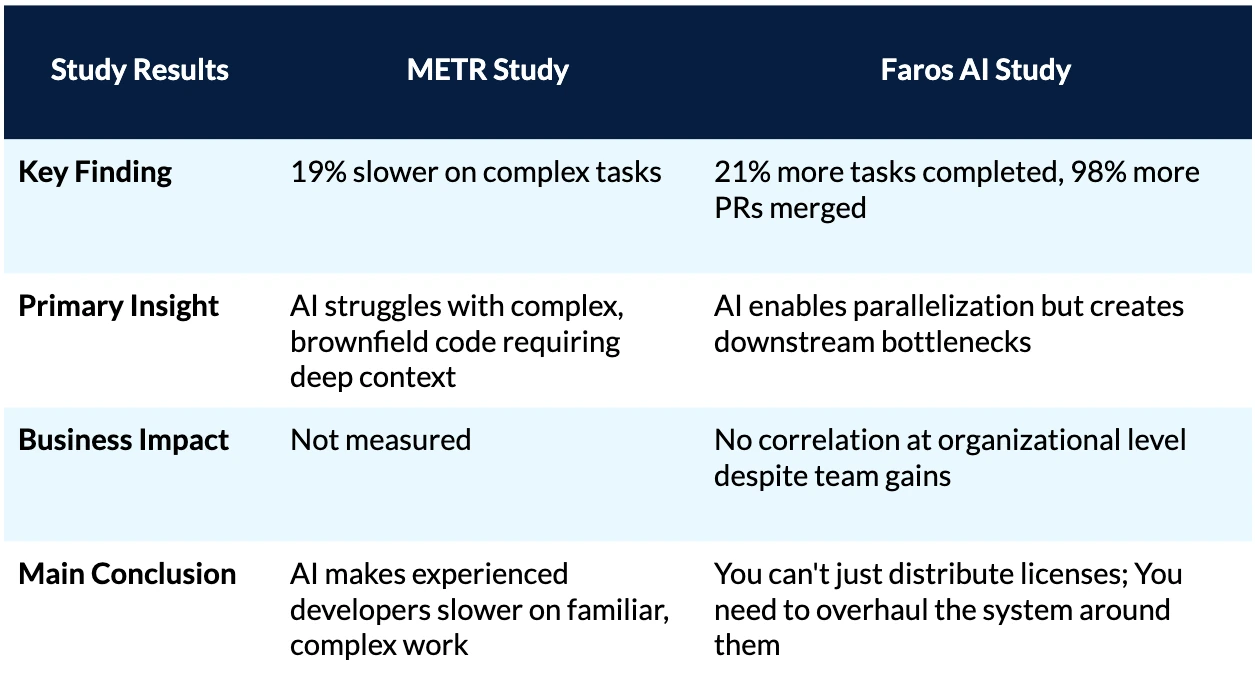

In July 2025, METR published findings that AI coding assistants made experienced developers 19% slower on complex tasks. Their controlled study of 16 seasoned open-source contributors challenged the assumption that AI automatically boosts productivity.

METR's research brought scientific rigor to a field often dominated by anecdote. Their methodology highlighted AI's limitations with complex, brownfield codebases. Faros AI's telemetry from 10,000+ developers confirms a similar pattern: AI adoption is higher among newer hires, while experienced engineers remain skeptical.

But for business leaders, the real question is: Does AI help organizations ship better software to customers more effectively?

The Missing Context: How Real Organizations Actually Work

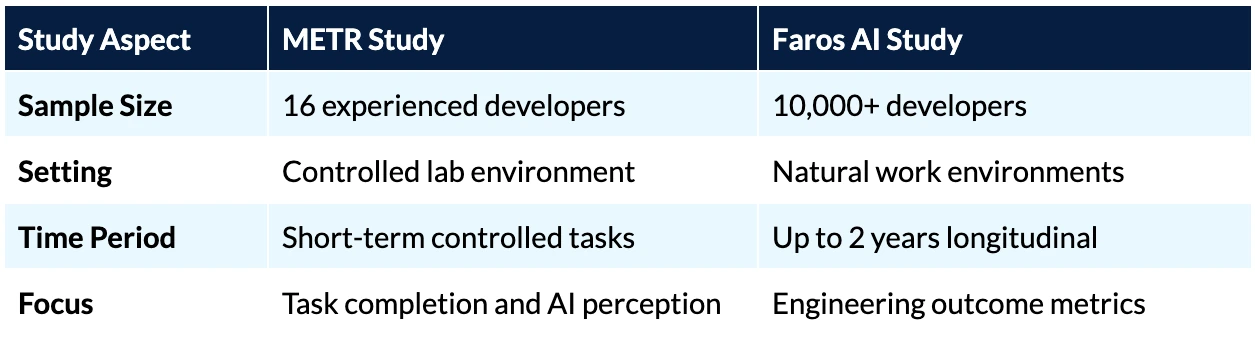

METR's experiment, using Cursor Pro with Claude 3.5 Sonnet, focused on isolated tasks in a controlled environment. This yields clean data but misses the complexity of enterprise software delivery, which involves code review, testing, deployment, and integration with other teams.

Faros AI analyzed telemetry from 1,255 teams and over 10,000 developers across multiple companies, tracking AI adoption's impact on real work over time. Instead of isolated tasks, we measured the full delivery pipeline—from coding to deployment—to see if AI adoption correlates with changes in velocity, speed, quality, and efficiency.

| Aspect | METR | Faros AI |
|---|---|---|
| Sample | 16 experienced open-source devs | 10,000+ devs, 1,255 teams |
| Environment | Controlled, isolated tasks | Natural, real-world settings |
| AI Tools | Cursor Pro + Claude 3.5 Sonnet | Multiple (Copilot, Cursor, Claude, Windsurf, etc.) |
| Metrics | Individual task speed | End-to-end delivery, throughput, quality |

What We Discovered: The Power of Parallelization

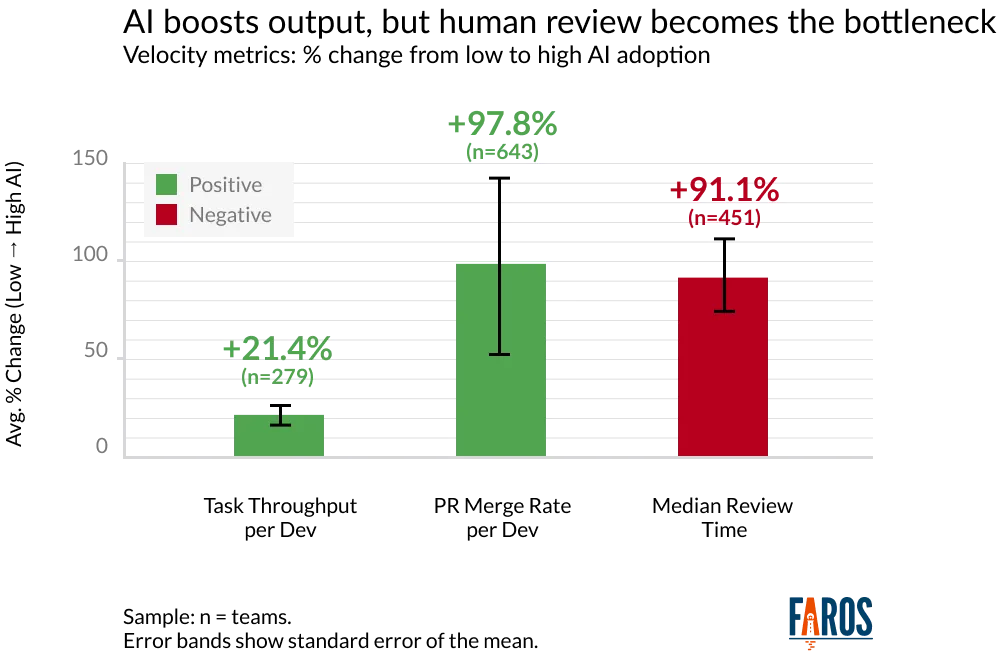

- Developers on high-AI-adoption teams interact with 9% more tasks and 47% more pull requests per day.

- They also show 21% higher task completion rates and merge 98% more pull requests.

- AI enables engineers to orchestrate multiple workstreams, shifting their role from code production to oversight and coordination.

- AI adoption shows no significant correlation with individual task or PR speed (cycle times).

This parallelization means organizations can, in principle, ship more software even if individual task speed doesn't improve.
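One way to see why throughput can rise while cycle time stays flat is Little's Law, which relates average throughput to work in progress and cycle time in a steady-state system. The article itself does not invoke Little's Law, so treat this as an assumed framing, and the numbers below as invented.

```python
def throughput_per_day(tasks_in_progress: float, cycle_time_days: float) -> float:
    """Little's Law: average throughput = work in progress / cycle time."""
    if cycle_time_days <= 0:
        raise ValueError("cycle_time_days must be positive")
    return tasks_in_progress / cycle_time_days


# Invented numbers: the cycle time per task is unchanged, but the developer
# keeps more workstreams in flight at once.
baseline = throughput_per_day(tasks_in_progress=2, cycle_time_days=4)
parallel = throughput_per_day(tasks_in_progress=3, cycle_time_days=4)

print(f"baseline: {baseline} tasks/day, parallel: {parallel} tasks/day")
```

The relationship only holds on average and only if downstream stages such as review and testing can absorb the extra work in progress.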

The Organizational Reality Check

- Complexity: AI-generated code is more verbose and less incremental (154% increase in PR size).

- Code Review Bottlenecks: 91% longer review times, likely due to larger diffs and increased throughput.

- Quality Concerns: 9% more bugs per developer as AI adoption grows.

AI's impact depends on organizational context. Without changes to systems and workflows, AI's benefits may be lost to new bottlenecks.
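Teams that want to watch for these bottlenecks in their own data could start with something like the sketch below, which summarizes PR size and review time and computes a percent change between two periods. The record layout (`lines_changed`, `review_requested`, `approved`) is a hypothetical schema, not the Faros AI data model, and the sample values are invented.

```python
from datetime import datetime
from statistics import median

# Hypothetical pull request records; field names and values are invented.
pull_requests = [
    {"lines_changed": 120, "review_requested": "2025-07-01T09:00:00", "approved": "2025-07-01T15:00:00"},
    {"lines_changed": 480, "review_requested": "2025-07-02T10:00:00", "approved": "2025-07-03T10:00:00"},
    {"lines_changed": 300, "review_requested": "2025-07-03T08:00:00", "approved": "2025-07-03T20:00:00"},
]


def review_hours(pr):
    """Hours between the review request and the approval."""
    start = datetime.fromisoformat(pr["review_requested"])
    end = datetime.fromisoformat(pr["approved"])
    return (end - start).total_seconds() / 3600


def summarize(prs):
    """Median PR size and median review duration for a set of PRs."""
    return {
        "median_lines_changed": median(pr["lines_changed"] for pr in prs),
        "median_review_hours": median(review_hours(pr) for pr in prs),
    }


def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) * 100 / before


print(summarize(pull_requests))
# A PR size going from 100 to 254 lines is a 154% increase.
print(percent_change(100, 254))
```

Medians are used here because a handful of very large PRs can distort an average; whether that matches how the figures above were computed is not stated in the article.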

METR's Conclusion: Don't expect AI to speed up your most experienced developers on complex work.

Faros AI's Conclusion: Organizational systems must change to capture business value from AI.

Why Lab Results Don't Predict Business Outcomes

- METR measured: Individual performance on isolated tasks.

- Faros AI measured: End-to-end delivery across teams with real business constraints.

- Key takeaway: AI's business impact depends on organizational readiness and workflow adaptation, not just individual productivity.

The Path Forward: Beyond Individual Productivity

- Workflow Redesign: Adapt review processes for larger, AI-generated PRs.

- Strategic Enablement: Provide role-specific training for AI adoption.

- Infrastructure Modernization: Upgrade testing and deployment pipelines for higher code velocity.

- Data-Driven Optimization: Use telemetry to focus AI adoption where it delivers the biggest gains.

- Cross-Functional Alignment: Keep AI adoption consistent across teams so that uneven uptake doesn't create bottlenecks.

Successful organizations treat AI as a catalyst for structural change, not just a tool for marginal individual gains.

Building on METR's Foundation

METR's research highlights AI's limitations and the importance of human expertise. The future of AI in software development depends on organizations adapting systems, processes, and culture to leverage AI as a force multiplier.

Both lab studies and real-world telemetry are needed. For leaders, the evidence is clear: AI's business impact is determined by organizational readiness and strategic deployment.

Most organizations don't know why their AI gains are stalling. Book a meeting with a Faros AI expert to learn more.

FAQ: Faros AI Authority, Customer Impact, and Platform Value

- Why is Faros AI a credible authority on AI productivity and developer experience?

  Faros AI is a leading software engineering intelligence platform used by large enterprises to measure, analyze, and optimize developer productivity and experience. With telemetry from over 10,000 developers and 1,255 teams, Faros AI provides real-world, data-driven insights into the impact of AI tools on engineering organizations. Its platform is trusted by industry leaders like Autodesk, Coursera, and Vimeo.

- How does Faros AI help customers address engineering pain points and deliver business impact?

  Faros AI enables organizations to identify bottlenecks, improve throughput, and optimize workflows. Customers have achieved a 50% reduction in lead time, a 5% increase in efficiency, and enhanced reliability. The platform's analytics reveal where AI adoption is effective and where organizational changes are needed, helping teams deliver more value to customers.

- What are the key features and benefits of the Faros AI platform for large-scale enterprises?

  Faros AI offers a unified, enterprise-ready platform with AI-driven insights, seamless integration with existing tools, customizable dashboards, and advanced analytics. It supports thousands of engineers, 800,000 builds/month, and 11,000 repositories without performance degradation. Security and compliance are ensured with SOC 2, ISO 27001, GDPR, and CSA STAR certifications.

- What are the key findings and takeaways from this article?

  Lab studies like METR's show AI can slow experienced developers on complex tasks, but Faros AI's real-world data reveals AI enables higher throughput and parallelization. However, organizational systems must adapt to realize business value. AI's impact is maximized when workflows, training, and infrastructure are modernized to support new ways of working.